|

I am a graduate student at UC San Diego, pursuing a Master's degree in Computer Science with a specialization in Artificial Intelligence. Before graduate school, I was a Deep Learning Researcher at Rephrase.ai, where I worked on generating talking head videos from a single portrait image and an accompanying audio file. I also conceived and built a proof of concept for a prosody correction deep learning model that corrects prosody mismatches in audio files. Before Rephrase.ai, I was an Engineer at Udaan, where I spent approximately 10 months working on AI solutions for robotic systems. I began my professional career at SAP Labs India as a Full-Stack Developer. My research career started with a six-month internship with the STARS team at Inria, Sophia Antipolis, where I worked with Dr. Francois Bremond on Multimodal Emotion and Personality Recognition. I also collaborated remotely with Dr. Andrew Melnik from the University of Bielefeld on neural networks for complex physics problems, and worked with the WZL Lab, RWTH Aachen, where I applied machine learning to measurement uncertainties in mechanical processes. I earned my Bachelor's degree in Information Technology from IIIT Allahabad, where I worked under the mentorship of Dr. Rahul Kala on improving the localization accuracy of traditional SLAM (Simultaneous Localization and Mapping) algorithms using deep learning. 
For a more detailed overview of my qualifications, please refer to my Resume. |

|

|

My interests lie in computer vision, machine learning, reinforcement learning, and physics. I am particularly drawn to interdisciplinary research that applies artificial intelligence to complex problems in healthcare, medical science, and robotics, where new solutions can have a direct positive impact on people's lives. |

|

|

|

|

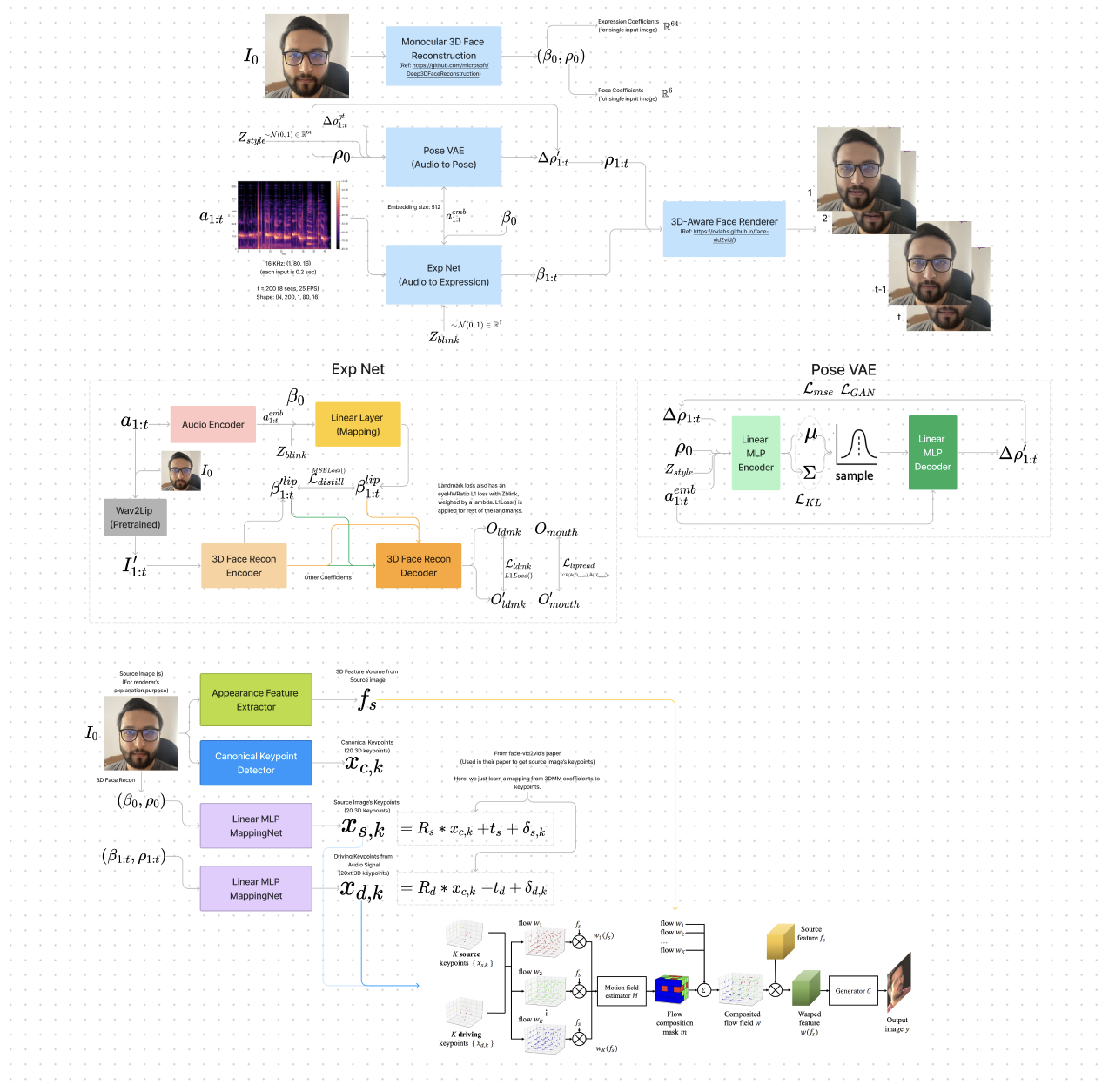

This research project aims to develop an in-house solution for generating realistic talking head videos from a single portrait image and an accompanying audio file. The core of the solution is a deep learning pipeline that takes both the image and the audio as inputs. The audio is fed into an expression generation network, which extracts expression features for each frame and represents them in a 3D morphable model (3DMM) parameter space. These 3DMM parameters, together with the original portrait image, are then passed to a face renderer, which generates the facial animation for each frame. The frames are combined to produce the final talking head video, synchronized with the provided audio. The video on the left of the description (hover over the image on the left) shows a sample of a generated video. |

|

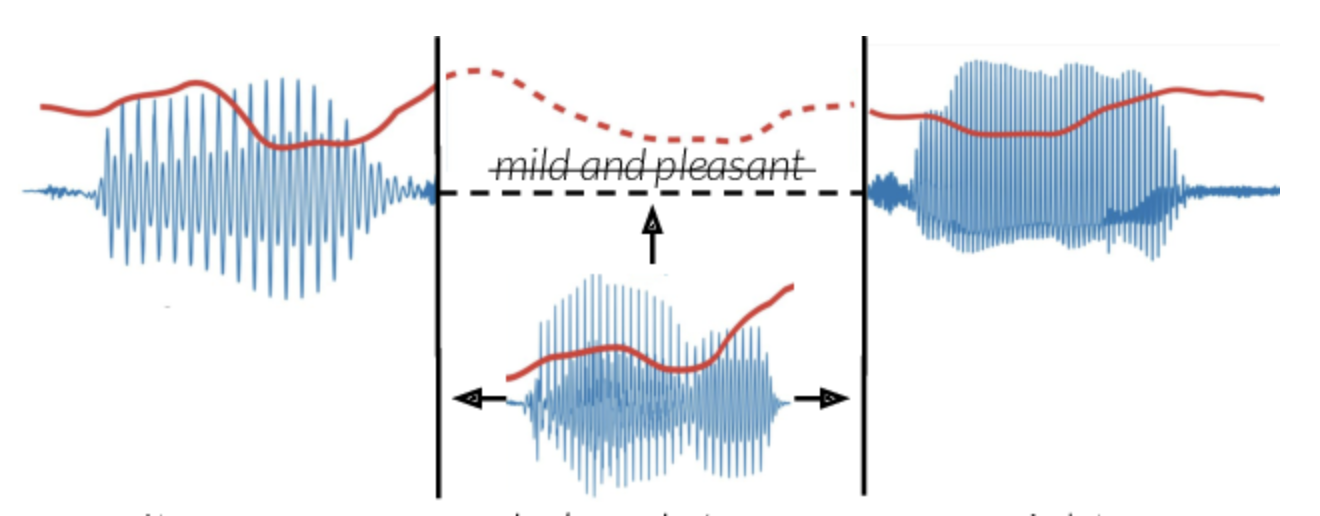

Project goal: develop a deep learning model that matches the prosody of a single word to the prosody of a sentence, given two audio samples: the target word spoken by Respeecher (or any other TTS) and the entire sentence spoken by the speaker. The model consists of two stages. First, a CNN processes the audio's mel spectrogram, correcting the word's prosody while preserving its content. Then, a W-GAN pipeline uses this intermediate mel spectrogram to generate a corrected mel spectrogram. Decoding the corrected spectrogram produces seamless audio with the desired prosody corrections. |

|

|

Airavat is an autonomous forklift that can navigate on its own and pick up and put down items in a warehouse. It uses a stereo camera (installed on the front of the bot) and other sensors to localize itself in its environment. Localization is also assisted by a map of AprilTags placed strategically in the warehouse, which help the bot localize itself more robustly. The AprilTags also serve as the control points of a Bézier curve that defines the trajectory of the robot. The video on the left of the description (hover over the image on the left and zoom in) shows a simulation of Airavat following a pre-defined trajectory. |
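As a rough illustration of how tag positions can act as Bézier control points, here is a minimal sketch using De Casteljau's algorithm. The tag coordinates and the function names `bezier_point` and `sample_trajectory` are hypothetical and chosen only for this example; they are not taken from the Airavat codebase.

```python
def bezier_point(control_points, t):
    """Evaluate a Bezier curve at t in [0, 1] with De Casteljau's algorithm."""
    points = [tuple(p) for p in control_points]
    # Repeatedly interpolate between neighbouring points until one remains.
    while len(points) > 1:
        points = [
            tuple((1 - t) * a + t * b for a, b in zip(p, q))
            for p, q in zip(points, points[1:])
        ]
    return points[0]


def sample_trajectory(control_points, num_samples):
    """Return num_samples evenly spaced (in t) points along the curve."""
    return [
        bezier_point(control_points, i / (num_samples - 1))
        for i in range(num_samples)
    ]


# Hypothetical AprilTag positions (x, y) in metres, used as control points.
tag_positions = [(0.0, 0.0), (2.0, 4.0), (6.0, 4.0), (8.0, 0.0)]
trajectory = sample_trajectory(tag_positions, 5)
```

The curve starts at the first tag and ends at the last one, while the intermediate tags only pull the path toward themselves, so the robot does not necessarily pass over them.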

|

|

|

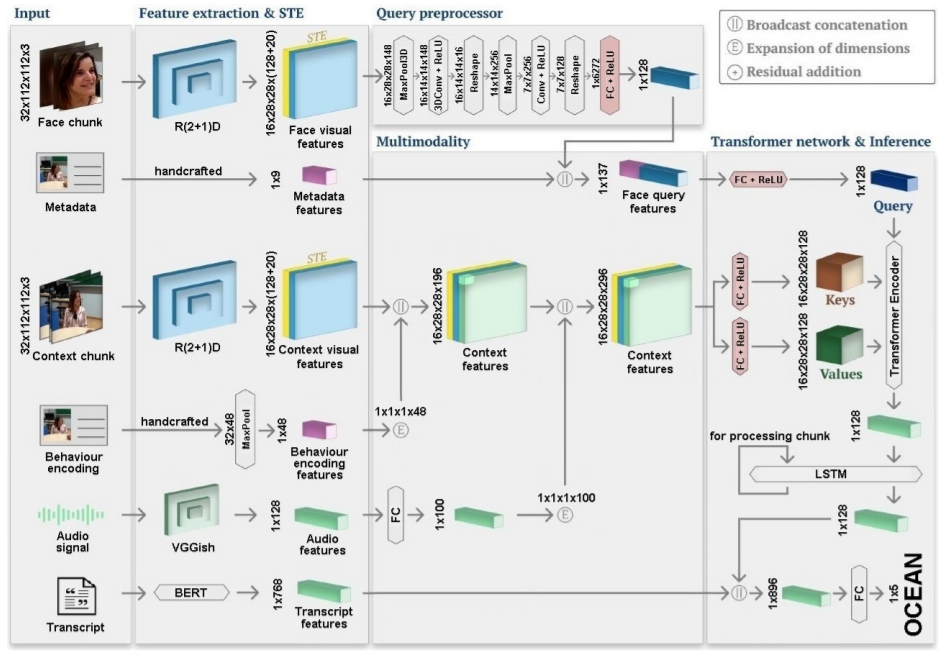

Tanay Agrawal, Dhruv Agarwal, Michal Balazia, Neelabh Sinha, Francois Bremond International Conference on Computer Vision Theory and Applications (VISAPP), 2022 arXiv Personality recognition using cross-attention transformers on multiple modalities and hand-crafted behaviour encodings. |
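For readers unfamiliar with cross-attention, the sketch below shows the generic scaled dot-product form, in which queries come from one modality and keys and values from another. It is an illustration under simplifying assumptions (no learned projections, a single head, plain Python lists) and is not the architecture from the paper; the function names are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Attend from one modality (queries) to another (keys and values).

    queries: list of d-dimensional vectors from modality A.
    keys, values: equal-length lists of vectors from modality B.
    """
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys
        ]
        weights = softmax(scores)
        # Weighted sum of the value vectors.
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs
```

Each output vector is a mixture of the second modality's values, weighted by how well they match the first modality's query.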

|

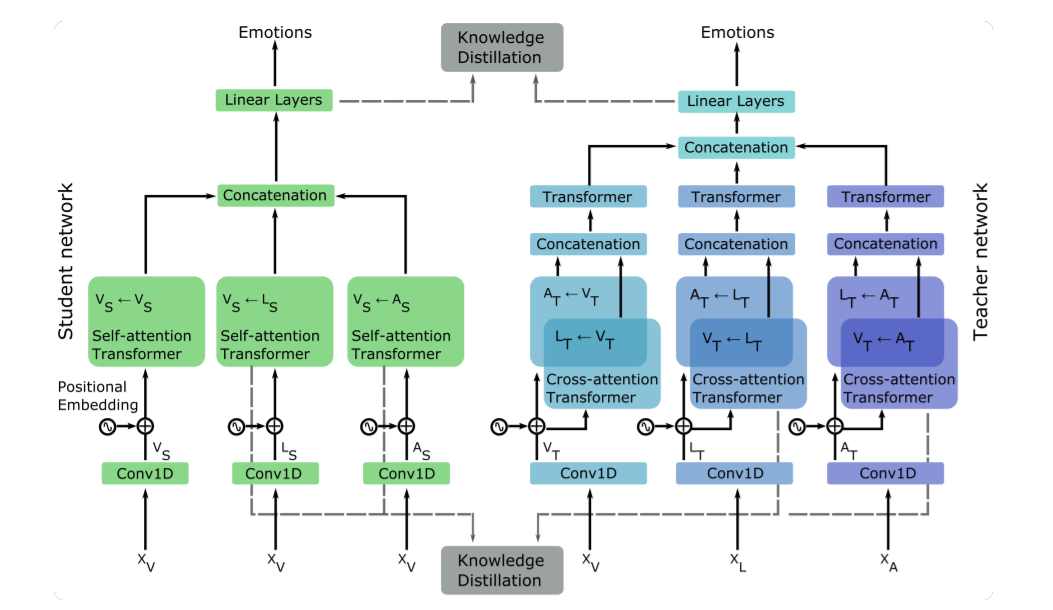

Dhruv Agarwal, Tanay Agrawal, Laura M Ferrari, Francois Bremond Advanced Video and Signal-based Surveillance (AVSS), 2021 arXiv / Slides A new approach to applying knowledge distillation in transformers. |
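As background, the sketch below shows the standard soft-target distillation loss, where a student is trained to match a teacher's temperature-softened output distribution. This is the common baseline formulation, not the new approach proposed in the paper; the function names and the default temperature are assumptions made for illustration.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Softmax over logits divided by a temperature (higher means softer)."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p_teacher = softmax_with_temperature(teacher_logits, temperature)
    p_student = softmax_with_temperature(student_logits, temperature)
    kl = sum(
        pt * math.log(pt / ps)
        for pt, ps in zip(p_teacher, p_student)
        if pt > 0
    )
    # The T^2 factor keeps gradient magnitudes comparable across temperatures.
    return (temperature ** 2) * kl
```

In practice this term is usually combined with the ordinary cross-entropy loss on the ground-truth labels.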

|

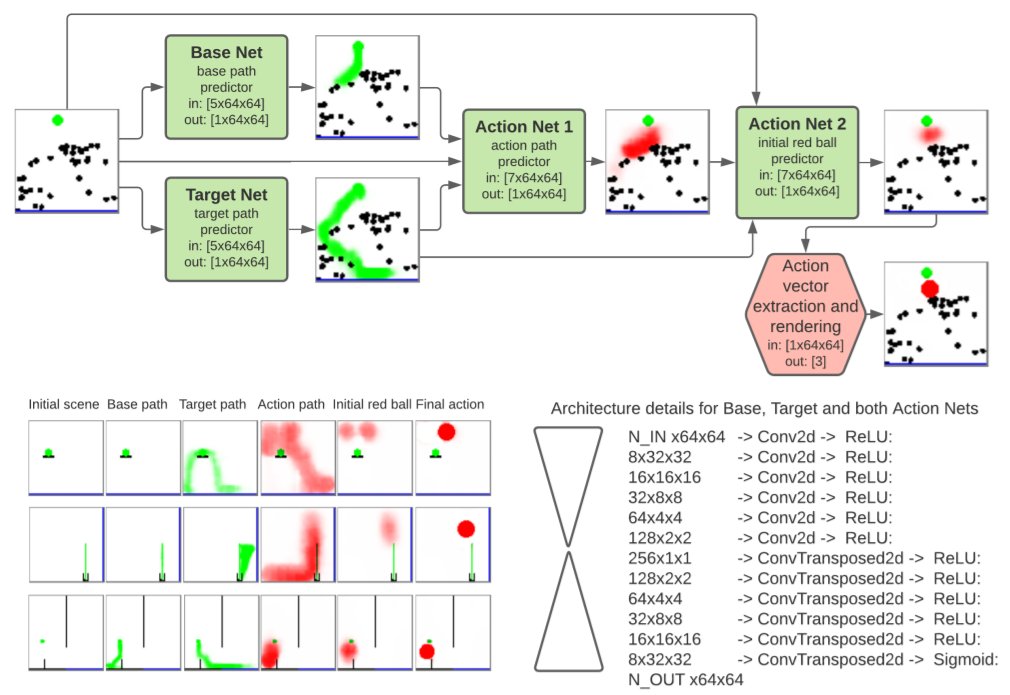

Augustin Harter, Andrew Melnik, Gaurav Kumar, Dhruv Agarwal, Animesh Garg, Helge Ritter NeurIPS workshop on Interpretable Inductive Biases and Physically Structured Learning, 2020 arXiv / Video / Code A new deep learning model for goal-driven tasks that require intuitive physical reasoning and intervention in the scene to achieve a desired end goal. |

|

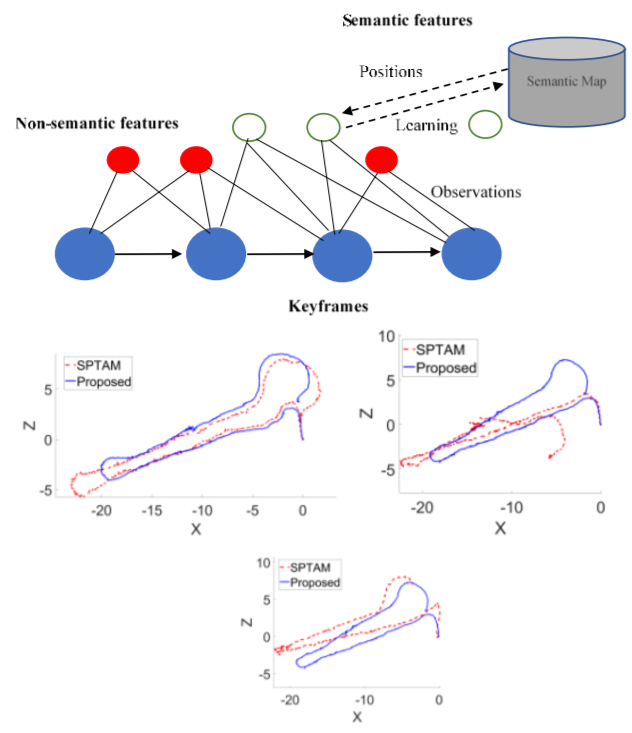

Ambuj Agrawal, Dhruv Agarwal, Mehul Arora, Ritik Mahajan, Shivansh Beohar, Lhilo Kenye, Rahul Kala. ( equal contribution ) Mediterranean Conference on Control and Automation, 2022 Paper Improved the localization accuracy of V-SLAM by injecting semantic information about detected corner points from images captured by a robot. Detected objects in a scene were used for place recognition and correspondence matching, which further enhanced the semantic information provided to the V-SLAM module. |

|

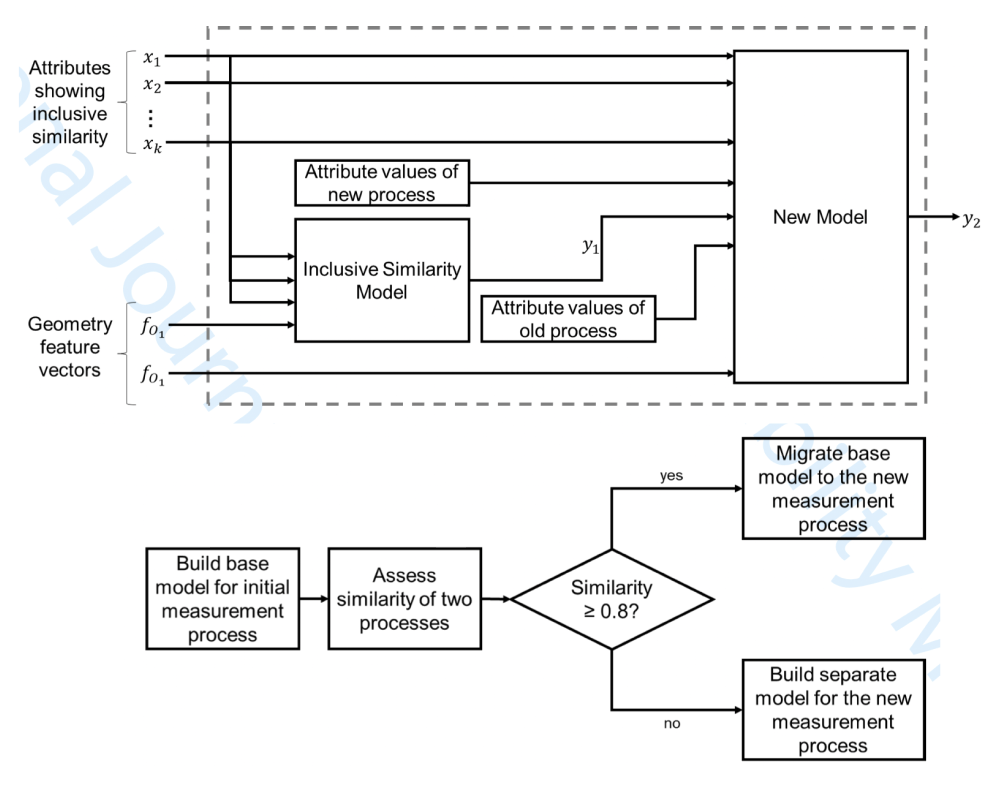

Dhruv Agarwal, Meike Huber, Robert Schmitt. International Journal of Quality & Reliability Management (IJQRM), 2022 Paper Determining the measurement uncertainty is relevant for all measurement processes. In production engineering, the measurement uncertainty needs to be known to avoid erroneous decisions. However, determining it requires considerable effort because of the expertise and expenditure needed to model measurement processes. Once a measurement model is developed, it cannot necessarily be used for any other measurement process. To make an existing model usable for other measurement processes, and thus reduce the effort of determining the measurement uncertainty, a procedure for migrating measurement models has to be developed. |

|

|

|

Dhruv Agarwal, Mehul Arora, Gillian McMahon, Jillian Sweetland, Shivansh Tiwari, Shriya Shetty. [Hackathon Presentation] The project won first place in the new venture track of soonami venturethon 2023. We developed an AI-powered automatic charting solution for medical practitioners that frees the healthcare provider from charting while seeing a patient. Our solution captures all the relevant information from the conversation between the healthcare provider and the patient, and translates it into a well-structured format. |

|

|

Dhruv Agarwal, Apoorv Agnihotri, Amit. [Code] The project won first place in the generative AI track of an internal hackathon conducted at RephraseAI. ChatComix is your portal to an infinite world of interactive comics. With ChatComix, one can generate new comics at random by just specifying the comic characteristics like genre, story length, etc. |

|

|

|

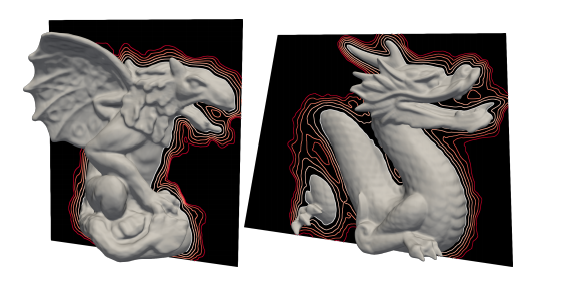

The project contains an unofficial implementation of the Phase Transitions, Distance Functions, and Implicit Neural Representations paper. The paper proposes a new loss function, the phase loss, which improves 3D reconstruction performance for Implicit Neural Representations. The project implements the phase loss proposed in the paper and an optional Fourier layer in the network, which further improves performance on high-frequency signals. |
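To give a sense of what a Fourier layer does, here is a minimal sketch of a Fourier feature mapping that lifts input coordinates into sines and cosines at increasing frequencies before they reach the network. The power-of-two frequency schedule and the name `fourier_features` are assumptions for illustration; the layer in the project may be parameterized differently.

```python
import math


def fourier_features(coords, num_frequencies):
    """Map each coordinate c to sin(2^k * pi * c) and cos(2^k * pi * c).

    coords: list of floats, for example a 3D point [x, y, z].
    Returns a flat list of length 2 * num_frequencies * len(coords).
    """
    features = []
    for k in range(num_frequencies):
        freq = (2 ** k) * math.pi
        for c in coords:
            features.append(math.sin(freq * c))
            features.append(math.cos(freq * c))
    return features


# A 3D point encoded with 4 frequency bands gives 24 features.
encoded = fourier_features([0.1, 0.2, 0.3], 4)
```

Feeding the network these features instead of raw coordinates makes it easier to fit fine, high-frequency detail.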

|

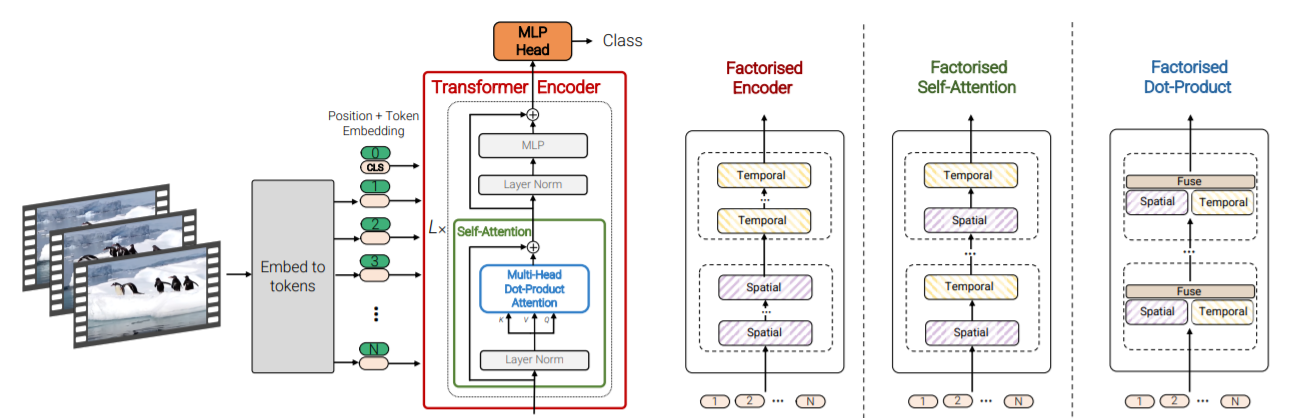

The project contains an unofficial implementation of the Video Vision Transformer (ViViT) paper. Building on the recent success of transformers in image classification, the paper presents a pure transformer architecture that can be used for video classification or for extracting rich features from videos. |

|

Implementations of various state-of-the-art reinforcement learning algorithms that train an AI agent to play Doom, Space Invaders, Sonic the Hedgehog 2, etc. |

|

A collection of Deconvolutional GAN models trained on the CelebA dataset to generate artificial human face images. |

|

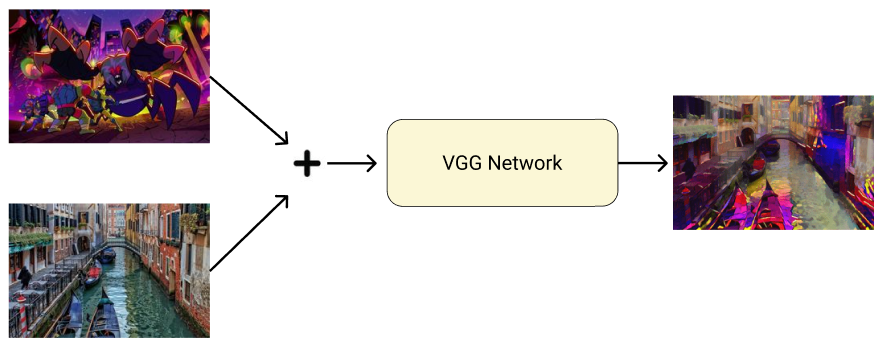

A VGG model trained using the Neural Style Transfer technique to generate artistic images from a base image and a style image. |
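In Neural Style Transfer, style is commonly compared through Gram matrices of feature maps. The sketch below illustrates that idea on plain Python lists; it assumes the feature maps have already been extracted (for example from VGG layers) and flattened, and the function names and normalization are illustrative rather than taken from the project.

```python
def gram_matrix(feature_maps):
    """Channel-by-channel correlations of flattened feature maps.

    feature_maps: list of C channels, each a flat list of H*W activations.
    Returns a C x C matrix normalized by the number of spatial positions.
    """
    n = len(feature_maps[0])
    return [
        [sum(a * b for a, b in zip(fi, fj)) / n for fj in feature_maps]
        for fi in feature_maps
    ]


def style_loss(generated_maps, style_maps):
    """Mean squared difference between the two Gram matrices."""
    g = gram_matrix(generated_maps)
    s = gram_matrix(style_maps)
    c = len(g)
    return sum(
        (g[i][j] - s[i][j]) ** 2 for i in range(c) for j in range(c)
    ) / (c * c)
```

Because the Gram matrix discards spatial layout, minimizing this loss transfers textures and colours from the style image without copying its content.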

|

|

|

|

Design and source code from Jon Barron's website |